Abstract

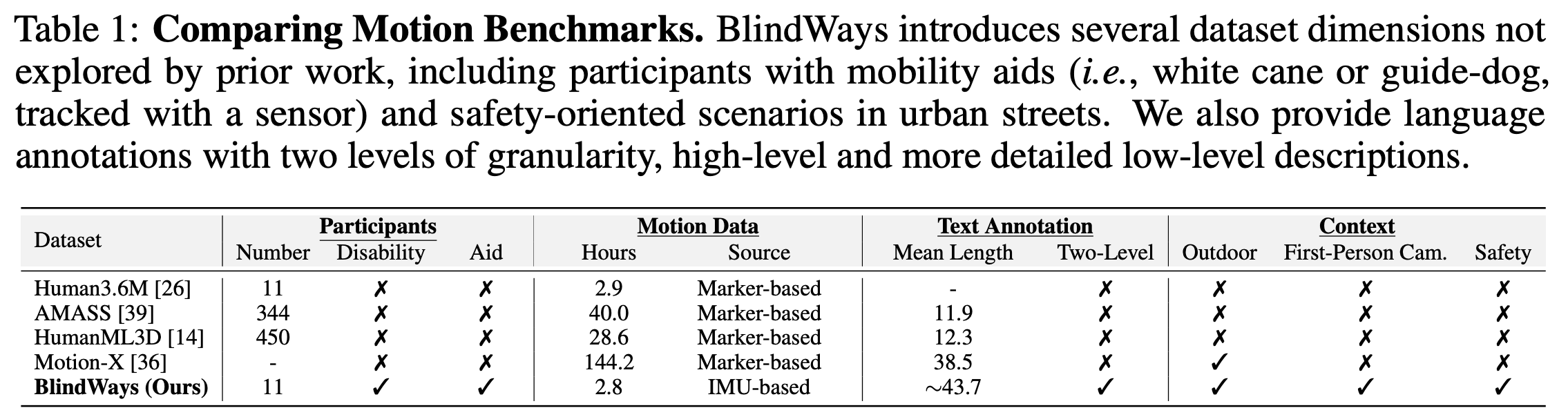

People who are blind perceive the world differently than those who are sighted. This often translates to different motion characteristics; for instance, when crossing at an intersection, blind individuals may move in ways that could potentially be more dangerous, e.g., veering further from the path and employing touch-based exploration around curbs and obstacles that may seem unpredictable. Yet the ability of 3D motion models to capture such behavior has not been previously studied, as existing datasets for 3D human motion lack diversity and are biased toward people who are sighted. In this work, we introduce BlindWays, the first multimodal motion benchmark for pedestrians who are blind. We collect 3D motion data using wearable sensors with 11 blind participants navigating eight different routes in a real-world urban setting. Additionally, we provide rich textual descriptions that capture the distinctive movement characteristics of blind pedestrians and their interactions with both the navigation aid (e.g., a white cane or a guide dog) and the environment. We benchmark state-of-the-art 3D human prediction models, finding poor performance with off-the-shelf and pre-training-based methods for our novel task. To contribute toward safer and more reliable autonomous systems that reason over diverse human movements in their environments, we publicly release our novel text-and-motion benchmark.

Acknowledgments

We thank the Rafik B. Hariri Institute for Computing and Computational Science and Engineering (Focused Research Program award #2023-07-001) and the National Science Foundation (IIS-2152077) for supporting this research. Hernisa Kacorri was supported by the National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR), ACL, HHS (grant #90REGE0024) and NSF (grant #2229885).

BibTeX

@inproceedings{kim2024,
  title={Text to Blind Motion},
  author={Kim, Hee Jae and Sengupta, Kathakoli and Kuribayashi, Masaki and Kacorri, Hernisa and Ohn-Bar, Eshed},
  booktitle={NeurIPS},
  year={2024}
}